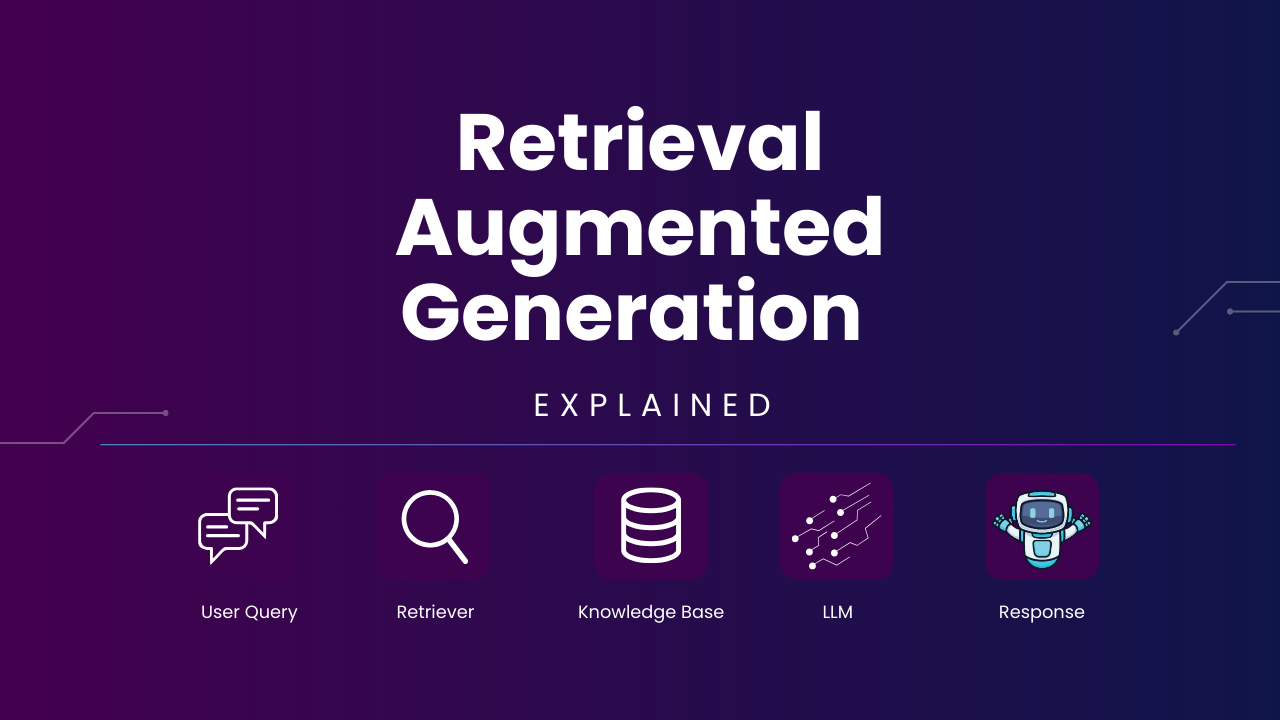

Anyone diving deeper into the world of large language models (LLMs) will often come across the term RAG, short for Retrieval Augmented Generation. Let’s break it down: what RAG is, how it functions, and why it’s such a transformative technology in artificial intelligence.

How Does RAG Work?

RAG employs two main components:

- Retriever: This component searches through the data and pulls out the documents relevant to the user’s question.

- Generator: Once the relevant documents are retrieved, the generator uses this information to craft responses or generate text.
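To make the two roles concrete, here is a minimal sketch in Python. It assumes a toy word-overlap score for the retriever and a stand-in function for the generator. Real systems typically use embedding search and an actual LLM call, and the names `tokenize`, `retrieve`, and `generate` are hypothetical, chosen only for illustration.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query, documents, top_k=2):
    """Retriever: rank documents by how many words they share with the query.

    Word overlap is a toy scoring rule assumed for this sketch.
    """
    query_terms = Counter(tokenize(query))
    scored = []
    for doc in documents:
        doc_terms = Counter(tokenize(doc))
        # Counter intersection keeps the minimum count of each shared word.
        overlap = sum((query_terms & doc_terms).values())
        if overlap > 0:
            scored.append((overlap, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def generate(query, retrieved_docs):
    """Generator: stand-in for an LLM call that only restates its evidence."""
    evidence = " ".join(retrieved_docs)
    return f"Q: {query} | Evidence used: {evidence}"


documents = [
    "Paris is the capital of France.",
    "The Moon orbits the Earth.",
    "France borders Spain.",
]
question = "What is the capital of France?"
print(generate(question, retrieve(question, documents, top_k=1)))
```

The retriever narrows the data down to a few relevant documents, and the generator only ever sees that shortlist, which is the division of labour described above.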

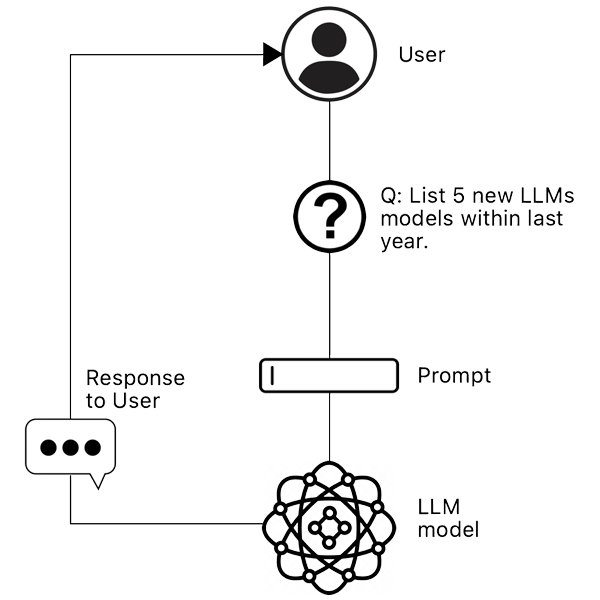

Generation

When a user asks a question, the LLM provides a confident response based only on the parameters it learned during training.

The issue here is that the response may not be accurate, because the model’s knowledge is frozen at training time while the underlying data keeps evolving.

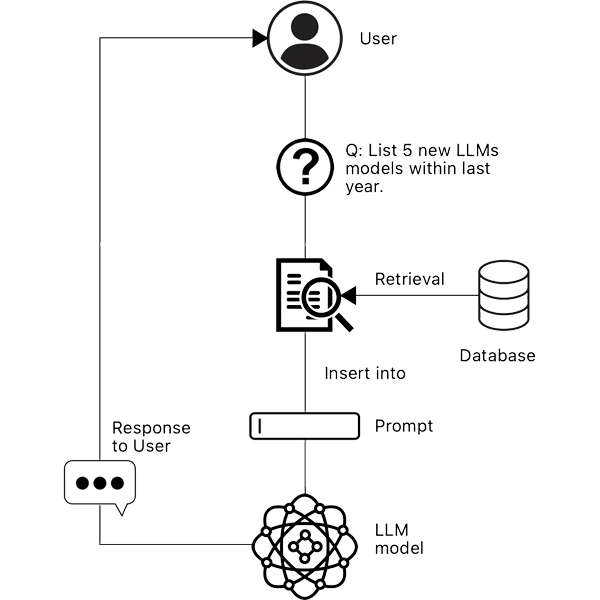

Retrieval Augmented

When a user asks a question, we can first retrieve relevant content from a content store, say the internet, and combine it with the question in the prompt.

Now, the LLM can provide a relevant response backed by evidence.
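As a rough illustration of what "combining into the prompt" can look like, the sketch below builds one prompt string from a question and some retrieved passages. The function name `build_augmented_prompt` and the instruction wording are assumptions made for this example, not a fixed standard.

```python
def build_augmented_prompt(question, passages):
    """Combine retrieved passages and the user's question into one prompt.

    Numbering the passages lets the model point to its evidence.
    """
    numbered = "\n".join(
        f"[{i}] {passage}" for i, passage in enumerate(passages, start=1)
    )
    return (
        "Answer the question using only the context below. "
        "Cite passage numbers as evidence. "
        "If the context is not sufficient, say \"I don't know\".\n\n"
        f"Context:\n{numbered}\n\n"
        f"Question: {question}\nAnswer:"
    )


prompt = build_augmented_prompt(
    "Who wrote the report?",
    ["Alice wrote the report.", "Bob edited the report."],
)
print(prompt)
```

The resulting string is what gets sent to the LLM in place of the bare question, so the model answers from the supplied passages rather than from its training parameters alone.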

Advantages

- Model hallucinates less

- Model responses can be more accurate and up to date

- Model says “I don’t know” if the user’s question cannot be reliably answered.
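One possible way to get the “I don’t know” behaviour is to check how well the retrieved passages match the question before answering. The sketch below assumes each passage comes with a relevance score between 0 and 1. The function `answer_or_abstain` and the `min_score` threshold are hypothetical, and in practice the same effect is often achieved through instructions in the prompt.

```python
def answer_or_abstain(question, scored_passages, min_score=0.5):
    """Answer only when at least one passage is relevant enough.

    scored_passages is a list of (score, passage) pairs from any retriever.
    min_score is an assumed cut-off that would need tuning on real data.
    """
    supported = [passage for score, passage in scored_passages if score >= min_score]
    if not supported:
        # No reliable evidence, so abstain instead of guessing.
        return "I don't know."
    # Stand-in for the LLM call: report which evidence the answer rests on.
    return "Answer grounded in: " + " | ".join(supported)


print(answer_or_abstain("Who won?", [(0.9, "Team A won."), (0.2, "It rained.")]))
print(answer_or_abstain("Who won?", [(0.1, "It rained.")]))
```

With weak or missing evidence the function abstains, which is the behaviour the last advantage describes.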