Large Language Models (LLMs), popularized by ChatGPT, have recently gained immense popularity and now power a wide range of applications. However, because these applications rely on cloud computation and paid API services, costs can escalate quickly as the number of users grows. Keeping them efficient and affordable therefore takes deliberate optimization. Here are seven strategies being used today:

Semantic Caching

Semantic caching stores previously generated responses and reuses them when a new prompt is semantically similar to one already answered, not only when it is textually identical. Similarity is typically measured by comparing embeddings of the prompts against a threshold, so a paraphrased question can be served from the cache. This cuts latency and avoids paying for repeated API calls on repetitive queries.
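A minimal sketch of the idea, assuming a toy bag-of-words vector in place of a real embedding model and an arbitrary similarity threshold of 0.8. The names `SemanticCache`, `embed`, and `answer` are hypothetical and do not correspond to any particular library:

```python
import math
import re
from collections import Counter
from typing import Callable, Optional


def embed(text: str) -> Counter:
    """Toy bag-of-words vector standing in for a real embedding model (assumption)."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values()) * sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class SemanticCache:
    def __init__(self, threshold: float = 0.8) -> None:
        # Minimum similarity for reusing a cached answer; 0.8 is an arbitrary example value.
        self.threshold = threshold
        self.entries: list[tuple[Counter, str]] = []

    def get(self, prompt: str) -> Optional[str]:
        """Return the response of the most similar cached prompt, or None on a miss."""
        query = embed(prompt)
        best_score, best_response = 0.0, None
        for vector, response in self.entries:
            score = cosine(query, vector)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None

    def put(self, prompt: str, response: str) -> None:
        self.entries.append((embed(prompt), response))


def answer(prompt: str, cache: SemanticCache, call_llm: Callable[[str], str]) -> str:
    """Serve from the cache when possible; only call the (paid) LLM on a miss."""
    cached = cache.get(prompt)
    if cached is not None:
        return cached
    response = call_llm(prompt)
    cache.put(prompt, response)
    return response
```

With this sketch, "What is the capital of France, please?" reuses the cached answer to "What is the capital of France?", while an unrelated question falls through to the LLM. A production cache would use a proper embedding model and a vector index instead of a linear scan; the threshold is the key tuning knob, since setting it too low returns answers to questions that were never actually asked.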

Summarizing Long Conversations

Summarizing long conversations reduces token usage, because a chat application typically resends the conversation history with every request. Tools such as LangChain's ConversationSummaryMemory condense earlier dialogue automatically, preserving essential context while dropping redundant detail, which lowers both cost and latency.
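The following is a dependency-free sketch of the same idea, not LangChain's implementation: the most recent turns are kept verbatim and older turns are folded into a running summary. `SummarizingMemory` is a hypothetical class, and `naive_summarize` is a stand-in for what would, in practice, be a call to an inexpensive LLM asked to condense the text:

```python
from typing import Callable


class SummarizingMemory:
    """Keep the last `keep_last` turns verbatim and fold older turns into a summary."""

    def __init__(self, summarize: Callable[[str, list[str]], str], keep_last: int = 4) -> None:
        if keep_last < 1:
            raise ValueError("keep_last must be at least 1")
        self.summarize = summarize  # hypothetical summarizer: (old summary, turns) -> new summary
        self.keep_last = keep_last
        self.summary = ""
        self.turns: list[str] = []

    def add(self, role: str, text: str) -> None:
        self.turns.append(f"{role}: {text}")
        if len(self.turns) > self.keep_last:
            # Move the overflow turns out of the verbatim buffer and into the summary.
            overflow = self.turns[: -self.keep_last]
            self.turns = self.turns[-self.keep_last :]
            self.summary = self.summarize(self.summary, overflow)

    def context(self) -> str:
        """Text that would be sent to the model on the next request."""
        parts = []
        if self.summary:
            parts.append(f"Summary of earlier conversation: {self.summary}")
        parts.extend(self.turns)
        return "\n".join(parts)


def naive_summarize(previous: str, turns: list[str]) -> str:
    # Stand-in for an LLM call: keep only the first sentence of each old turn.
    firsts = [turn.split(". ")[0] for turn in turns]
    return " ".join(filter(None, [previous, *firsts]))
```

However long the conversation grows, the prompt carries at most `keep_last` full turns plus one summary, so the per-request token count stays roughly bounded instead of growing linearly with the history.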

Compressing Prompts

Prompt compression removes unnecessary tokens, such as filler phrases, redundant instructions, and excess whitespace, which can significantly shorten input queries with little or no loss in accuracy. Shorter prompts mean fewer input tokens to pay for and less text for the model to process, which lowers both latency and operating costs.
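A deliberately naive, rule-based sketch of prompt compression, assuming a hand-written table of filler phrases (the `REPLACEMENTS` entries are illustrative, not a standard list). Learned compressors that score the importance of each token go much further; this only shows the shape of the idea:

```python
import re

# Hypothetical filler phrases and their shorter equivalents ("" means drop entirely).
REPLACEMENTS = {
    "could you please": "",
    "i would like you to": "",
    "please note that": "",
    "it is important to note that": "",
    "in order to": "to",
    "due to the fact that": "because",
}


def compress_prompt(prompt: str) -> str:
    text = prompt
    for phrase, short in REPLACEMENTS.items():
        # Whole-phrase, case-insensitive replacement.
        text = re.sub(rf"\b{re.escape(phrase)}\b", short, text, flags=re.IGNORECASE)
    # Collapse runs of spaces, tabs and newlines into single spaces.
    return re.sub(r"\s+", " ", text).strip()
```

Rules like these should avoid touching words that carry meaning, such as negations, because dropping "not" to save a token changes the instruction.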

Using Cheaper Models

Opting for more affordable models reduces operational costs, and for suitable tasks it does so without a noticeable drop in quality. By routing simple requests, such as classification or extraction, to lightweight models and reserving larger models for complex reasoning, developers can build cost-effective AI solutions.

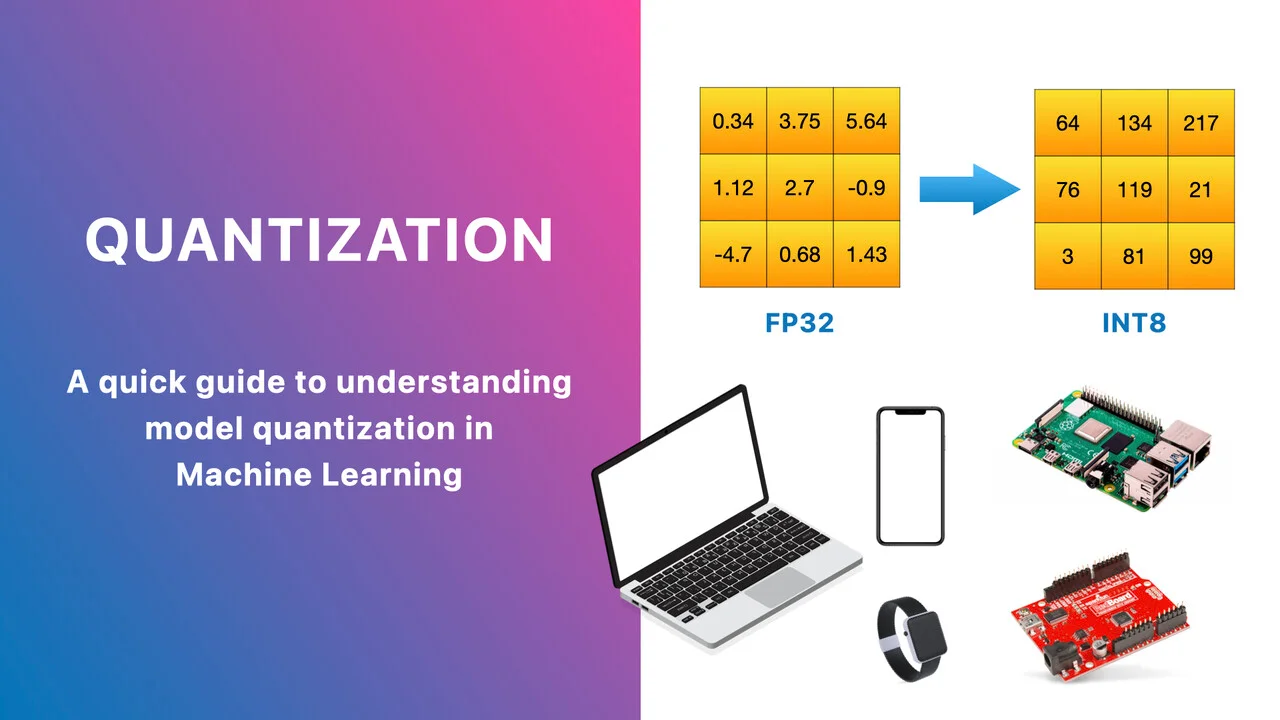
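The routing described above can be sketched as a simple rule-based router. The model names, prices, task categories, and word limit below are all hypothetical placeholders; real systems may also use a classifier or try the cheap model first and escalate when its answer looks unreliable:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTier:
    name: str                  # hypothetical model identifier
    cost_per_1k_tokens: float  # hypothetical price, for illustration only


CHEAP = ModelTier("small-model", 0.0005)
CAPABLE = ModelTier("large-model", 0.01)

# Hypothetical task types that a lightweight model usually handles well.
SIMPLE_TASKS = {"classification", "extraction", "translation", "short_answer"}


def route(task_type: str, prompt: str, max_cheap_words: int = 1500) -> ModelTier:
    """Send simple, reasonably short requests to the cheap model; everything else to the capable one."""
    if task_type in SIMPLE_TASKS and len(prompt.split()) <= max_cheap_words:
        return CHEAP
    return CAPABLE
```

Keeping the routing rule in one function makes it easy to log which tier served each request and to compare quality before moving more task types to the cheaper model.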

Fine-tuning

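Fine-tuning adapts an LLM to the requirements of a specific task. A fine-tuned model often outperforms a generalist model on that narrow task, and because the desired behavior is learned rather than spelled out, prompts no longer need lengthy instructions or few-shot examples, which reduces token usage and latency. In some cases a fine-tuned smaller model can replace a larger, more expensive one, further optimizing resource utilization in production.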
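Most of the practical work in fine-tuning is preparing training data. The sketch below writes labelled examples in the chat-style JSONL layout (one JSON object with a `messages` list per line) that OpenAI's fine-tuning API accepts; other providers use different schemas, so treat the format as an assumption to check against your provider's documentation. The ticket-triage task and `to_chat_jsonl` helper are hypothetical:

```python
import json

# Hypothetical labelled examples for a narrow task: support-ticket triage.
examples = [
    ("My card was charged twice this month.", "billing"),
    ("The app crashes when I open settings.", "bug"),
]

SYSTEM = "Classify the support ticket as billing, bug or other."


def to_chat_jsonl(pairs, system: str = SYSTEM) -> str:
    """Serialize (input, label) pairs as one chat-format training record per line."""
    lines = []
    for ticket, label in pairs:
        record = {
            "messages": [
                {"role": "system", "content": system},
                {"role": "user", "content": ticket},
                {"role": "assistant", "content": label},
            ]
        }
        lines.append(json.dumps(record))
    return "\n".join(lines)
```

After training, each production request only needs the short system line and the ticket text, instead of a long instruction block with worked examples.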

Condensing JSON

For applications that need structured output, instructing the LLM to return JSON on a single line with no whitespace reduces token usage. Indentation and line breaks consume tokens without carrying any information, so removing them lowers consumption in tasks such as document extraction and API responses.

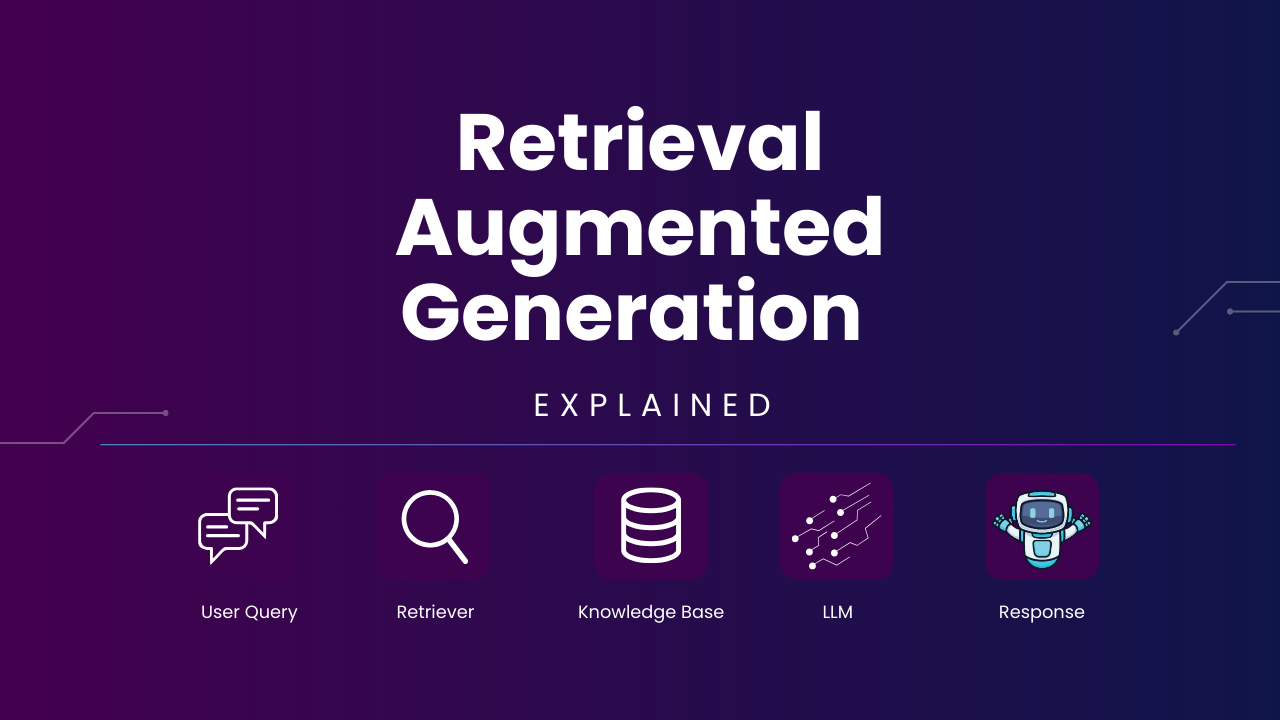
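The formatting overhead is easy to see with Python's standard `json` module: the same data serialized with indentation and in compact form. Character count is only a rough proxy for tokens, since exact counts depend on the model's tokenizer:

```python
import json

record = {
    "invoice_id": "INV-001",
    "total": 149.5,
    "items": [{"sku": "A1", "qty": 2}, {"sku": "B7", "qty": 1}],
}

pretty = json.dumps(record, indent=2)
# separators=(",", ":") also drops the spaces json.dumps inserts after commas and colons by default.
compact = json.dumps(record, separators=(",", ":"))

print(len(pretty), len(compact))
```

Both strings parse to the same object, so nothing is lost downstream. A prompt instruction such as "Return minified JSON on a single line with no whitespace" asks the model for the compact form; because models do not always comply exactly, it is still safest to parse the reply with a real JSON parser rather than relying on its layout.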

Context Tuning

Smart context retrieval improves the performance of LLM applications by making the retrieved information more relevant and accurate. Techniques such as adjusting system prompts and refining how documents are split into chunks help the LLM use its context effectively, reduce noise, and strengthen semantic search.
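The article does not prescribe a particular splitter, so the following is a minimal sketch of two common adjustments: overlapping fixed-size chunks, so that a fact is not cut in half at a boundary, and a relevance cut-off, so that only strong matches reach the prompt. Word overlap stands in for embedding similarity purely to keep the example dependency-free, and the chunk sizes and `min_score` are arbitrary example values:

```python
import re


def split_text(text: str, chunk_words: int = 50, overlap: int = 10) -> list[str]:
    """Split text into word windows in which each chunk repeats the last `overlap` words of the previous one."""
    if overlap >= chunk_words:
        raise ValueError("overlap must be smaller than chunk_words")
    words = text.split()
    step = chunk_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start : start + chunk_words]))
        if start + chunk_words >= len(words):
            break  # the last window already reaches the end of the text
    return chunks


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def top_k_chunks(query: str, chunks: list[str], k: int = 2, min_score: float = 0.1) -> list[str]:
    """Rank chunks by the share of query words they contain and drop weak matches to reduce noise."""
    q = tokens(query)
    scored = []
    for chunk in chunks:
        score = len(q & tokens(chunk)) / len(q) if q else 0.0
        if score >= min_score:
            scored.append((score, chunk))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for _, chunk in scored[:k]]
```

Sending only the chunks that clear the cut-off, rather than a fixed number regardless of relevance, keeps unrelated text out of the prompt, which both saves tokens and gives the model less noise to be distracted by.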

Combined, these strategies help LLM applications stay responsive while keeping costs economically sustainable.